Science Is Getting Less Bang for Its Buck

Despite vast increases in the time and money spent on research, progress is barely keeping pace with the past. What went wrong?

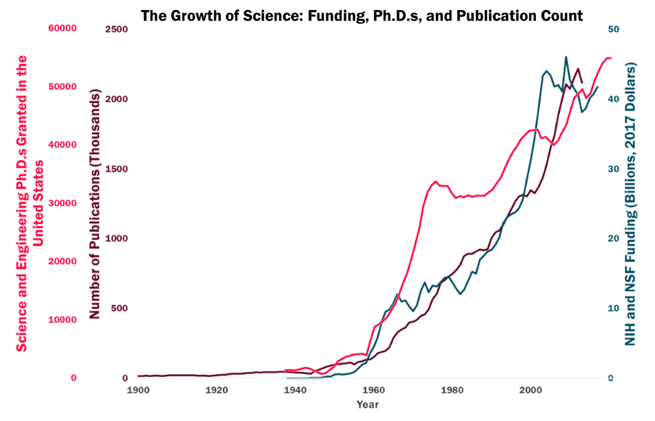

The writer Stewart Brand once wrote that “science is the only news.” While news headlines are dominated by politics, the economy, and gossip, it’s science and technology that underpin much of the advance of human welfare and the long-term progress of our civilization. This is reflected in an extraordinary growth in public investment in science. Today, there are more scientists, more funding for science, and more scientific papers published than ever before:

On the surface, this is encouraging. But for all this increase in effort, are we getting a proportional increase in our scientific understanding? Or are we investing vastly more merely to sustain (or even see a decline in) the rate of scientific progress?

It’s surprisingly difficult to measure scientific progress in meaningful ways. Part of the trouble is that it’s hard to accurately evaluate how important any given scientific discovery is.

Consider the early experiments on what we now call electricity. Many of these experiments seemed strange at the time. In one such experiment, scientists noticed that after rubbing amber on a cat’s fur, the amber would mysteriously attract objects such as feathers, for no apparent reason. In another experiment, a scientist noticed that a frog’s leg would unexpectedly twitch when touched by a metal scalpel.

Even to the scientists doing these experiments, it wasn’t obvious whether they were unimportant curiosities or a path to something deeper. Today, with the benefit of more than a century of hindsight, they look like epochal experiments, early hints of a new fundamental force of nature.

But even though it can be hard to assess the significance of scientific work, it’s necessary to make such assessments. We need these assessments to award science prizes, and to decide which scientists should be hired or receive grants. In each case, the standard approach is to ask independent scientists for their opinion of the work in question. This approach isn’t perfect, but it’s the best system we have.

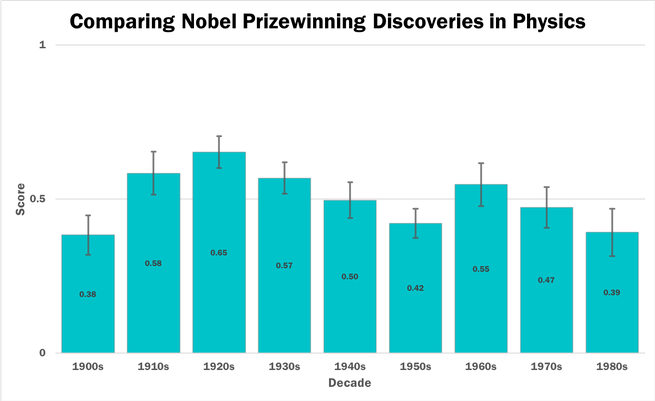

With that in mind, we ran a survey asking scientists to compare Nobel Prize–winning discoveries in their fields. We then used those rankings to determine how scientists think the quality of Nobel Prize–winning discoveries has changed over the decades.

As a sample survey question, we might ask a physicist which was a more important contribution to scientific understanding: the discovery of the neutron (the particle that makes up roughly half the ordinary matter in the universe) or the discovery of the cosmic-microwave-background radiation (the afterglow of the Big Bang). Think of the survey as a round-robin tournament, competitively matching discoveries against each other, with expert scientists judging which is better.

For the physics prize, we surveyed 93 physicists from the world’s top academic physics departments (according to the Shanghai Rankings of World Universities), and they judged 1,370 pairs of discoveries. The bars in the figure below show the scores for each decade. A decade’s score is the likelihood that a discovery from that decade was judged as more important than discoveries from other decades. Note that work is attributed to the year in which the discovery was made, not when the subsequent prize was awarded.
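To make the scoring rule concrete, here is a minimal sketch of how a per-decade score of this kind could be computed from pairwise judgments. It is an illustration only, not the procedure the survey actually used (see the methodology note at the end of the article). The function name `decade_scores` and the toy judgments are hypothetical, and it assumes a decade's score is simply the fraction of its cross-decade comparisons that it won.

```python
from collections import defaultdict

def decade_scores(judgments):
    """Return, for each decade, the fraction of cross-decade comparisons it won.

    judgments: iterable of (winner_decade, loser_decade) pairs, one per
    pairwise judgment made by a surveyed scientist.
    """
    wins = defaultdict(int)
    total = defaultdict(int)
    for winner, loser in judgments:
        if winner == loser:
            # A same-decade pair says nothing about one decade versus another.
            continue
        wins[winner] += 1
        total[winner] += 1
        total[loser] += 1
    return {decade: wins[decade] / total[decade] for decade in total}

# Hypothetical toy judgments, invented purely for illustration.
toy_judgments = [
    (1920, 1960),
    (1920, 1900),
    (1960, 1900),
    (1920, 1960),
    (1960, 1920),
]
scores = decade_scores(toy_judgments)
print(scores)
```

In this toy data the 1920s win three of their four comparisons, so their score is 0.75. A real analysis would also need to handle how pairs are sampled and how much each judge contributes, which this sketch ignores.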

The first decade has a poor showing. In that decade, the Nobel Committee was still figuring out exactly what the prize was for. There was, for instance, a prize for a better way of illuminating lighthouses and buoys at sea. That’s good news if you’re on a ship, but it scored poorly with modern physicists. But by the 1910s, the prizes were mostly awarded for things that accord with the modern conception of physics.

A golden age of physics followed, from the 1910s through the 1930s. This was the time of the invention of quantum mechanics, one of the greatest scientific discoveries of all time, a discovery that radically changed our understanding of reality. It also saw several other revolutions: the invention of X-ray crystallography, which let us probe the atomic world; the discovery of the neutron and of antimatter; and the discovery of many fundamental facts about radioactivity and the nuclear forces. It was one of the great periods in the history of science.

Following that period, there was a substantial decline, with a partial revival in the 1960s. That was due to two discoveries: the cosmic-microwave-background radiation, and the standard model of particle physics, our best theory of the fundamental particles and forces making up the universe. Even with those discoveries, physicists judged every decade from the 1940s through the 1980s as worse than the worst decade from the 1910s through 1930s. The very best discoveries in physics, as judged by physicists themselves, became less important.

Our graph stops at the end of the 1980s. The reason is that in recent years, the Nobel Committee has preferred to award prizes for work done in the 1980s and 1970s. In fact, just three discoveries made since 1990 have been awarded Nobel Prizes. This is too few to get a good quality estimate for the 1990s, and so we didn’t survey those prizes.

However, the paucity of prizes since 1990 is itself suggestive. The 1990s and 2000s have the dubious distinction of being the decades that the Nobel Committee has most strongly preferred to skip, awarding prizes for earlier work instead. Given that the 1980s and 1970s themselves don’t look so good, that’s bad news for physics.

Many reasonable objections can be leveled at our survey. Maybe the surveyed physicists are somehow biased, or working with an incomplete understanding of the prizewinning discoveries. As discussed earlier, it’s hard to pin down what it means for one discovery to be more important than another. And yet, scientists’ judgments are still the best way we have to compare discoveries.

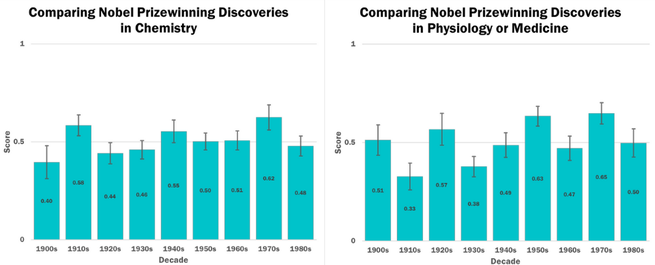

Even if physics isn’t doing so well, perhaps other fields are doing better? We carried out similar surveys for the Nobel Prize for chemistry and the Nobel Prize for physiology or medicine. Here are the scores:

The results are slightly more encouraging than those for physics, with perhaps a small improvement in the second half of the 20th century. But it is small. As in physics, the 1990s and 2000s are omitted, because the Nobel Committee has strongly preferred earlier work: Fewer prizes were awarded for work done in the 1990s and 2000s than over any similar window in earlier decades.

The picture this survey paints is bleak: Over the past century, we’ve vastly increased the time and money invested in science, but in scientists’ own judgment, we’re producing the most important breakthroughs at a near-constant rate. On a per-dollar or per-person basis, this suggests that science is becoming far less efficient.

Now, a critic might respond that the quality of Nobel Prize discoveries isn’t the same as the overall rate of progress in science. There are certainly many limitations of this measure. Parts of science are not covered by the Nobel Prizes, especially newer areas like computer science. The Nobel Committee occasionally misses important work. Perhaps some bias means scientists are more likely to venerate older prizes. And perhaps what matters more is the bulk of scientific work, the ordinary discoveries that make up most of science.

We recognize these limitations: The survey results are striking, but provide only a partial picture. However, we’ll soon see supporting evidence suggesting that it’s getting much harder to make important discoveries across the board. It’s requiring larger teams and far more extensive scientific training, and the overall economic impact is getting smaller. Taken together, these results suggest strong diminishing returns to our scientific efforts.

When we report these diminishing returns to colleagues, they sometimes tell us that this is nonsense, and insist that science is going through a golden age. They point to amazing recent discoveries, such as the Higgs particle and gravitational waves, as evidence that science is in better shape than ever.

These are, indeed, astonishing discoveries. But previous generations also made discoveries that were equally, if not more, remarkable. Compare, for example, the discovery of gravitational waves to Einstein’s 1915 discovery of his general theory of relativity. Not only did general relativity predict gravitational waves, it also radically changed our understanding of space, time, mass, energy, and gravity. The discovery of gravitational waves, while enormously technically impressive, did much less to change our understanding of the universe.

And while the discovery of the Higgs particle is remarkable, it pales beside the pantheon of particles discovered in the 1930s, including the neutron, one of the main constituents of our everyday world, and the positron, also known as the antielectron, which first revealed the shadowy world of antimatter. In a sense, the discovery of the Higgs particle is remarkable because it’s a return to a state of affairs common in the first half of the 20th century, but rare in recent decades.

Another common response is from people who say science is in better shape than ever because their own field is making great progress. We hear this most often about artificial intelligence (AI) and the CRISPR gene-editing technology in biology. But while AI, CRISPR, and similar fields are certainly moving fast, there have always been fields just as hot or hotter through the entire history of modern science.

Consider the progress of physics between 1924 and 1928. Over that time, physicists learned that the fundamental constituents of matter have both a particle and a wave nature; they formulated the laws of quantum mechanics, leading to Heisenberg’s uncertainty principle; they predicted the existence of antimatter; and many other things besides. As one of the leading protagonists, Paul Dirac, said, it was a time when “even second-rate physicists could make first-rate discoveries.”

For comparison, major discoveries in AI over the past few years include an improved ability to recognize images and human speech, and the ability to play games such as Go better than any human. These are important results, and we’re optimistic that work in AI will have a huge impact in the decades ahead. But it has taken far more time, money, and effort to generate these results, and it’s not clear they’re more significant breakthroughs than the reordering of reality uncovered in the 1920s.

Similarly, CRISPR has seen many breakthroughs over the past few years, including the modification of human embryos to correct a genetic heart disorder, and the creation of mosquitoes that can spread genes for malaria resistance through entire mosquito populations. But while such laboratory proofs-of-principle are remarkable, and the long-run potential of CRISPR is immense, such recent results are no more impressive than those of past periods of rapid progress in biology.

Why has science gotten so much more expensive, without producing commensurate gains in our understanding?

A partial answer to this question is suggested by work done by the economists Benjamin Jones and Bruce Weinberg. They’ve studied how old scientists are when they make their great discoveries. They found that in the early days of the Nobel Prize, future Nobel scientists were 37 years old, on average, when they made their prizewinning discovery. But in recent times that has risen to an average of 47 years, an increase of about a quarter of a scientist’s working career.

Perhaps scientists today need to know far more to make important discoveries. As a result, they need to study longer, and so are older, before they can do their most important work. That is, great discoveries are simply getting harder to make. And if they’re harder to make, that suggests there will be fewer of them, or they will require much more effort.

In a similar vein, scientific collaborations now often involve far more people than they did a century ago. When Ernest Rutherford discovered the nucleus of the atom in 1911, he published it in a paper with just a single author: himself. By contrast, the two 2012 papers announcing the discovery of the Higgs particle had roughly a thousand authors each. On average, research teams nearly quadrupled in size over the 20th century, and that increase continues today. For many research questions, making progress today requires far more skills, more expensive equipment, and a larger team than it once did.

If it’s true that science is becoming harder, why is that the case?

Suppose we think of science—the exploration of nature—as similar to the exploration of a new continent. In the early days, little is known. Explorers set out and discover major new features with ease. But gradually they fill in knowledge of the new continent. To make significant discoveries explorers must go to ever-more-remote areas, under ever-more-difficult conditions. Exploration gets harder. In this view, science is a limited frontier, requiring ever more effort to “fill in the map.” One day the map will be near complete, and science will largely be exhausted. In this view, any increase in the difficulty of discovery is intrinsic to the structure of scientific knowledge itself.

An archetype for this point of view comes from fundamental physics, where many people have been entranced by the search for a “theory of everything,” a theory explaining all the fundamental particles and forces we see in the world. We can only discover such a theory once. And if you think that’s the primary goal of science, then it is indeed a limited frontier.

But there’s a different point of view, a point of view in which science is an endless frontier, where there are always new phenomena to be discovered, and major new questions to be answered. The possibility of an endless frontier is a consequence of an idea known as emergence. Consider, for example, water. It’s one thing to have equations describing the way a single molecule of water behaves. It’s quite another to understand why rainbows form in the sky, or the crashing of ocean waves, or the origins of the dirty snowballs in space that we call comets. All these are “water,” but at different levels of complexity. Each emerges out of the basic equations describing water, but who would ever have suspected from those equations something so intricate as a rainbow or the crashing of waves?

The mere fact of emergent levels of behavior doesn’t necessarily imply that there will be a never-ending supply of new phenomena to be discovered, and new questions to be answered. But in some domains it seems likely. Consider, for example, that computer science began in 1936 when Alan Turing developed the mathematical model of computation we now call the Turing machine. That model was extremely rudimentary, almost like a child’s toy. And yet the model is mathematically equivalent to today’s computer: Computer science actually began with its “theory of everything.” Despite that, it has seen many extraordinary discoveries since: ideas such as the cryptographic protocols that underlie internet commerce and cryptocurrencies; the never-ending layers of beautiful ideas that go into programming language design; even, more whimsically, some of the imaginative ideas seen in the very best video games.

These are the rainbows and ocean waves and comets of computer science. What’s more, our experience of computing so far suggests that it really is inexhaustible, that it’s always possible to discover beautiful new phenomena, new layers of behavior which pose fundamental new questions and give rise to new fields of inquiry. Computer science appears to be open-ended.

In a similar way, it’s possible new frontiers will continue to open up in biology, as we gain the ability to edit genomes, to synthesize new organisms, and to better understand the relationship between an organism’s genome and its form and behavior. Something similar may happen in physics and chemistry too, with ideas such as programmable matter and new designer phases of matter. In each case, new phenomena pose new questions, in what may be an open-ended way.

So the optimistic view is that science is an endless frontier, and we will continue to discover and even create entirely new fields, with their own fundamental questions. If we see a slowing today, it is because science has remained too focused on established fields, where it’s becoming ever harder to make progress. We hope the future will see a more rapid proliferation of new fields, giving rise to major new questions. This is an opportunity for science to accelerate.

If science is suffering diminishing returns, what does that mean for our long-term future? Will there be fewer new scientific insights to inspire new technologies of the kind which have so reshaped our world over the past century? In fact, economists see evidence this is happening, in what they call the productivity slowdown.

When they speak of the productivity slowdown, economists are using “productivity” in a specialized way, though close to the everyday meaning: Roughly speaking, a worker’s productivity is the ingenuity with which things are made. So productivity grows when we develop technologies and make discoveries that make it easier to make things.

For instance, in 1909 the German chemist Fritz Haber discovered nitrogen fixation, a way of taking nitrogen from the air and turning it into ammonia. That ammonia could then, in turn, be used to make fertilizer. Those fertilizers allowed the same number of workers to produce far more food, and so productivity rose.

Productivity growth is a sign of an economically healthy society, one continually producing ideas that improve its ability to generate wealth. The bad news is that U.S. productivity growth is way down. It’s been dropping since the 1950s, when it was roughly six times higher than today. That means we see about as much change over a decade today as we saw in 18 months in the 1950s.
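The decade-versus-months comparison is simple arithmetic, and a rough sketch makes it easy to check. The helper names below are hypothetical, and the calculation assumes that change accumulates in direct proportion to the growth rate (no compounding), which is a simplification.

```python
def equivalent_months(rate_ratio, years_today=10):
    """Months at the older, faster growth rate that match `years_today` years
    of change at today's rate, assuming change scales linearly with the rate."""
    return years_today * 12 / rate_ratio

def implied_rate_ratio(months_then, years_today=10):
    """Ratio of old to current growth rate implied by a given equivalence."""
    return years_today * 12 / months_then

print(equivalent_months(6))    # a sixfold ratio gives 20.0 months
print(implied_rate_ratio(18))  # 18 months corresponds to a ratio of about 6.7
```

Under these assumptions, a growth rate six times higher compresses a decade of change into about 20 months, which is in the same range as the 18 months quoted above. The 18-month figure corresponds to a ratio a little above six, consistent with "roughly six times."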

That may sound surprising. Haven’t we seen many inventions over the past decades? Isn’t today a golden age of accelerating technological change?

Not so, argue the economists Tyler Cowen and Robert Gordon. In their books The Great Stagnation and The Rise and Fall of American Growth, they point out that the early part of the 20th century saw the large-scale deployment of many powerful general-purpose technologies: electricity, the internal-combustion engine, radio, telephones, air travel, the assembly line, fertilizer, and many more.

By contrast, they marshal economic data suggesting that things haven’t changed nearly as much since the 1970s. Yes, we’ve had advances associated with two powerful general-purpose technologies: the computer and the internet. But many other technologies have improved only incrementally.

Think, for example, about the way automobiles, air travel, and the space program transformed our society between 1910 and 1970, expanding people’s experience of the world. By 1970 these forms of travel had reached something close to their modern form, and ambitious projects such as the Concorde and the Apollo Program largely failed to expand transportation further. Perhaps technologies like self-driving cars will lead to dramatic changes in transport in the future. But recent progress in transport has been incremental when compared to the progress of the past.

What’s causing the productivity slowdown? The subject is controversial among economists, and many different answers have been proposed. Some have argued that it’s merely that existing productivity measures don’t do a good job measuring the impact of new technologies. Our argument here suggests a different explanation, that diminishing returns to spending on science are contributing to a genuine productivity slowdown.

We aren’t the first to suggest that scientific discovery is showing diminishing returns. In his 1996 book The End of Science, the science writer John Horgan interviewed many leading scientists and asked them about prospects for progress in their own fields. The distinguished biologist Bentley Glass, who had written a 1971 article in Science arguing that the glory days of science were over, told Horgan:

It’s hard to believe, for me, anyway, that anything as comprehensive and earthshaking as Darwin’s view of the evolution of life or Mendel’s understanding of the nature of heredity will be easy to come by again. After all, these have been discovered!*

Horgan’s findings were not encouraging. Here is Leo Kadanoff, a leading theoretical physicist, on recent progress in science:

The truth is, there is nothing—there is nothing—of the same order of magnitude as the accomplishments of the invention of quantum mechanics or of the double helix or of relativity. Just nothing like that has happened in the last few decades.

Horgan asked Kadanoff whether that state of affairs was permanent. Kadanoff was silent, before sighing and replying: “Once you have proven that the world is lawful to the satisfaction of many human beings, you can’t do that again.”

But while many individuals have raised concerns about diminishing returns to science, there has been little institutional response. The meteorologist Kelvin Droegemeier, the current nominee to be President Donald Trump’s science adviser, claimed in 2016 that “the pace of discovery is accelerating” in remarks to a U.S. Senate committee. The problem of diminishing returns is mentioned nowhere in the 2018 report of the National Science Foundation, which instead talks optimistically of “potentially transformative research that will generate pioneering discoveries and advance exciting new frontiers in science.” Of course, many scientific institutions—particularly new institutions—do aim to find improved ways of operating in their own fields. But that’s not the same as an organized institutional response to diminishing returns.

Perhaps this lack of response is in part because some scientists see acknowledging diminishing returns as betraying scientists’ collective self-interest. Most scientists strongly favor more research funding. They like to portray science in a positive light, emphasizing benefits and minimizing negatives. That impulse is understandable, but the evidence is that science has slowed enormously per dollar or hour spent. That evidence demands a large-scale institutional response. It should be a major subject in public policy, and at grant agencies and universities. Better understanding the cause of this phenomenon is important, and identifying ways to reverse it is one of the greatest opportunities to improve our future.

Methodology and sources: More details on our methodology and sources may be found in this appendix.

* This article previously misstated where Bentley Glass’s quote first appeared.