SkyKnit: How an AI Took Over an Adult Knitting Community

Ribald knitters teamed up with a neural-network creator to generate new types of tentacled, cozy shapes.

Janelle Shane is a humorist who creates and mines her material from neural networks, the form of machine learning that has come to dominate the field of artificial intelligence over the last half-decade.

Perhaps you’ve seen the candy-heart slogans she generated for Valentine’s Day: DEAR ME, MY MY, LOVE BOT, CUTE KISS, MY BEAR, and LOVE BUN.

Or her new paint-color names: Parp Green, Shy Bather, Farty Red, and Bull Cream.

Or her neural-net-generated Halloween costumes: Punk Tree, Disco Monster, Spartan Gandalf, Starfleet Shark, and A Masked Box.

Her latest project, still ongoing, pushes the joke into a new, physical realm. Prodded by a knitter on the knitting forum Ravelry, Shane trained a type of neural network on a series of over 500 sets of knitting instructions. Then, she generated new instructions, which members of the Ravelry community have actually attempted to knit.

“The knitting project has been a particularly fun one so far just because it ended up being a dialogue between this computer program and these knitters that went over my head in a lot of ways,” Shane told me. “The computer would spit out a whole bunch of instructions that I couldn’t read and the knitters would say, this is the funniest thing I’ve ever read.”

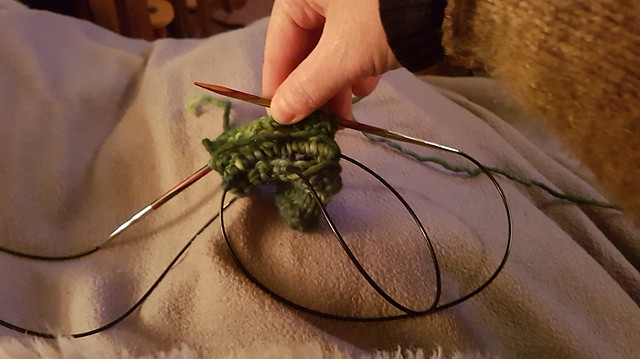

The human-machine collaboration created configurations of yarn that you probably wouldn’t give to your in-laws for Christmas, but they were interesting. The user citikas was the first to post a try at one of the earliest patterns, “reverss shawl.” It was strange, but it did have some charisma.

Shane nicknamed the whole effort “Project Hilarious Disaster.” The community called it SkyKnit.

The idea of using neural networks to solve computing problems has been around for decades. But it took until the last 10 years or so for the right mix of techniques, data sets, chips, and computing power to transform neural networks into deployable technical tools. There are many different kinds suited to different sorts of tasks. Some translate between different languages for Google. Others automatically label pictures. Still others are part of what powers Facebook’s News Feed software. In the tech world, they are now everywhere.

The different networks all attempt to model the data they’ve been fed by tuning a vast, funky flowchart. After you’ve created a statistical model that describes your real data, you can also roll the dice and generate new, never-before-seen data of the same kind.

How this works—like, the math behind it—is very hard to visualize because values inside the model can have hundreds of dimensions and we are humble three-dimensional creatures moving through time. But as the neural-network enthusiast Robin Sloan puts it, “So what? It turns out imaginary spaces are useful even if you can’t, in fact, imagine them.”

Out of that ferment, a new kind of art has emerged. Its practitioners use neural networks not to attain practical results, but to see what’s lurking in these vast, opaque systems. What did the machines learn about the world as they attempted to understand the data they’d been fed? Famously, Google released DeepDream, which produced trippy visualizations that also demonstrated how that type of neural network processed the textures and objects in its source imagery.

Google’s David Ha has been working with drawings. Sloan is working with sentences. Allison Parrish makes poetry. Ross Goodwin has tried several writerly forms.

But all these experiments are happening inside the symbolic space of the computer. In that world, a letter is just a defined character. It is not dark ink on white paper in a certain shape. A picture is an arrangement of pixels, not oil on canvas.

And that’s what makes the knitting project so fascinating. The outputs of the software had to be rendered in yarn.

Knitting instructions are a bit like code. There are standard maneuvers, repetitive components, and lots of calculation involved. “My husband says knitting is just maths. It’s maths done with string and sticks. You have this many stitches,” said the Ravelry user Woolbeast in the thread about the project. “You do these things in these places that many times, and you have a design, or a shape.”

In practice, knitting patterns contain a lot of abbreviations like k and p, for knit and purl (the two standard types of stitches), st for stitches, yo for yarn over, or sl1 for “slip one stitch purl-wise.” The patterns tend to take a form like this:

row 1: sl1, kfb, k1 (4 sts)

row 2: sl1, kfb, k to end of row (5 sts)

The neural network knows nothing of how these letters correspond to words like knit or the actual real-world action of knitting. It is just taking the literal text of patterns, and using them as strings of characters in its model of the data. Then, it’s spitting out new strings of characters, which are the patterns people tried to knit.
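To make the "strings of characters" idea concrete, here is a minimal sketch in Python. It is not Shane's neural network: it swaps in a far simpler character-level Markov chain, which only remembers which character tends to follow each three-character context. The training text is the two example rows quoted above; the names `build_model`, `generate`, and `TRAINING_TEXT` are hypothetical and chosen for illustration. Like the real system, it has no notion of what "k" or "sl1" means.

```python
import random
from collections import defaultdict

# Assumption: the "data set" is just the two example rows quoted in the article.
TRAINING_TEXT = (
    "row 1: sl1, kfb, k1 (4 sts)\n"
    "row 2: sl1, kfb, k to end of row (5 sts)\n"
)


def build_model(text, order=3):
    """Map each `order`-character context to the characters seen right after it."""
    model = defaultdict(list)
    for i in range(len(text) - order):
        context = text[i:i + order]
        model[context].append(text[i + order])
    return model


def generate(model, seed, length, rng):
    """Extend `seed` one character at a time by sampling from the model."""
    order = len(seed)
    out = seed
    for _ in range(length):
        choices = model.get(out[-order:])
        if not choices:
            # This context never appeared in the training text, so stop.
            break
        out += rng.choice(choices)
    return out


if __name__ == "__main__":
    model = build_model(TRAINING_TEXT, order=3)
    print(generate(model, "row", 60, random.Random(0)))
```

The output recombines fragments of the training rows into something that looks like a pattern without being one. A neural network captures much longer-range structure than this toy does, but the input and output are the same kind of thing: characters in, characters out.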

The project began on December 13 of last year, when a Ravelry user, JohannaB, suggested to Shane that her neural net could be taught to write knitting patterns. The community responded enthusiastically, like the user agadbois, who proclaimed, “I will absolutely teach a computer to knit!!! Or at least help one design a scarf (or whatever godforsaken mangled bit of fabric will come out of this).”

Over the next couple of weeks, they crept toward a data set they could use to build the model. First, they were able to access a fairly standardized set of patterns from Stitch-maps.com, a service run by the knitter J. C. Briar.

Then, Shane began to add submissions crowdsourced from Ravelry’s users. The latter data was messy and filled with oddities and even some NSFW knitted objects. When I expressed surprise at the ribaldry evident in the thread (Knitters! Who knew?), one Ravelry user wanted it noted that the particular forum on which the discussion took place (LSG) has a special role on the site. “LSG (lazy, stupid, and godless) is an 18+ group designed to be swearing-friendly,” the user LTHook told me. “The main forums are family-friendly, and the database tags mature patterns so people can tailor their browsing.”

Thus, the neural network was being fed all kinds of things from this particular LSG community. “A few notable new additions: Opus the Octopus, Dice Bag of Doom, Doctor Who TARDIS Dishcloth, and something merely called ‘The Impaler,’” Shane wrote on the forum. “The number of patterns with tentacles is now alarmingly high,” she said in another post.

When they hit 500 entries, Shane began training the neural network, and slowly feeding some of the new patterns back to the group. The instructions contained some text and some descriptions of rows that looked like actual patterns.

For example, here are the first four rows from one set of instructions that the neural net generated and named “fishcock.”

fishcock

row 1 (rs): *k3, k2tog, [yo] twice, ssk, repeat from * to last st, k1.

row 2: p1, *p2tog, yo, p2, repeat from * to last st, k1.

row 3: *[p1, k1] twice, repeat from * to last st, p1.

row 4: *p2, k1, p3, k1, repeat from * to last 2 sts, p2.

The network was able to deduce the concept of numbered rows, solely from the texts basically being composed of rows. The system was able to produce patterns that were just on the edge of knittability. But they required substantial “debugging,” as Shane put it.

One user, bevbh, described some of the errors as like “code that won’t compile.” For example, bevbh gave this scenario: “If you are knitting along and have 30 stitches in the row and the next row only gives you instructions for 25 stitches, you have to improvise what to do with your remaining five stitches.”

But many of the instructions that were generated were flawed in complicated ways. They required the test knitters to apply a lot of human skill and intelligence. For example, here is the user BellaG, narrating her interpretation of the fishcock instructions, which I would say is just on the edge of understandability, if you’re not a knitter:

“There’s not a number of stitches that will work for all rows, so I started with 15 (the repeat done twice, plus the end stitch). Rows two, four, five, and seven didn’t have enough stitches, so I just worked the pattern until I got to the end stitch and worked that as written,” she posted to the forum. “Double yarn-overs can’t be just knit or just purled on the recovery rows; you have to knit one and purl the other, so I did that when I got to the double yarn-overs on rows two and six.”

This kind of “fixing” of the pattern is not unique to the neural-network-generated designs. It is merely an extreme version of a process that knitters have to follow for many kinds of patterns. “My wrestling with the [SkyKnit-generated] ‘tiny baby whale Soto’ pattern was different from other patterns not so much in what needed to be done, as the degree to which I needed to interpret and ‘read between the lines’ to fit it together,” the user GloriaHanlon told me.

Historically, knitting patterns have varied in the degree of detail they provided. New patterns are a little more foolproof. Old patterns do not suffer fools. “I agree that an analogy with 19th-century knitting patterns is quite fitting,” the user bevbh said. “Those patterns were often cryptic by our standards. Interpretation was expected.”

But a core problem in knitting the neural-network designs is that there was no actual intent behind the instructions. And that intent is a major part of how knitters come to understand a given pattern.

“When you start a knitting pattern, you know what it is that you’re trying to make (sock, sweater, blanket square) and the pattern often comes with a picture of the finished object, which allows you to see the details. You go into it knowing what the designer’s intention is,” BellaG explained to me. “With the neural-network patterns, there’s no picture, and it doesn’t know what the finished object is supposed to be, which means you don’t know what you’re going to get until you start knitting it. And that affects how you adjust to the pattern ‘mistakes’: The neural network knows the stitch names, but it doesn’t understand what the stitches do. It doesn’t know that a k2tog is knitting two stitches together (a decrease) and a yo is a yarn-over (a lacy increase), so it doesn’t know to keep the stitch counts consistent, or to deliberately change them to make a particular shape.”
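The stitch-count bookkeeping BellaG describes can be sketched in a few lines of Python. This is an illustrative sketch, not a tool anyone in the thread used: the `STITCH_EFFECTS` table and the helper names are assumptions, and the table covers only the abbreviations that appear in the rows quoted in this article. Each stitch is recorded as how many stitches it uses up from the previous row and how many it leaves on the needle.

```python
import re

# (stitches consumed from the previous row, stitches produced on this row).
# Assumption: a small hand-written table covering only the stitches quoted here.
STITCH_EFFECTS = {
    "k": (1, 1),      # knit
    "p": (1, 1),      # purl
    "sl": (1, 1),     # slip
    "yo": (0, 1),     # yarn over: an increase
    "k2tog": (2, 1),  # knit two together: a decrease
    "p2tog": (2, 1),  # purl two together: a decrease
    "ssk": (2, 1),    # slip, slip, knit: a decrease
    "kfb": (1, 2),    # knit front and back: an increase
}


def stitch_effect(token):
    """Return (consumed, produced) for a token such as 'k3', 'yo', or 'k2tog'."""
    if token in STITCH_EFFECTS:
        return STITCH_EFFECTS[token]
    match = re.fullmatch(r"(k|p|sl)(\d+)", token)
    if match is None:
        raise ValueError(f"unknown stitch: {token}")
    consumed, produced = STITCH_EFFECTS[match.group(1)]
    count = int(match.group(2))
    return consumed * count, produced * count


def totals(tokens):
    """Sum (consumed, produced) over a list of stitch tokens."""
    effects = [stitch_effect(t) for t in tokens]
    return sum(c for c, _ in effects), sum(p for _, p in effects)


def repeat_fits(available, edge_tokens, repeat_tokens):
    """True if the edge stitches plus a whole number of repeats use exactly `available` stitches."""
    edge_consumed, _ = totals(edge_tokens)
    repeat_consumed, _ = totals(repeat_tokens)
    remaining = available - edge_consumed
    return remaining >= 0 and remaining % repeat_consumed == 0


# The repeated sections of rows 1 and 2 of the generated pattern quoted earlier.
ROW1_REPEAT = ["k3", "k2tog", "yo", "yo", "ssk"]
ROW2_REPEAT = ["p2tog", "yo", "p2"]

if __name__ == "__main__":
    print(totals(ROW1_REPEAT))
    print(repeat_fits(15, ["k1"], ROW1_REPEAT))
    print(repeat_fits(15, ["p1", "k1"], ROW2_REPEAT))
```

Under these assumptions, the row 1 repeat uses seven stitches and leaves seven, so two repeats plus the end stitch come to the 15 BellaG cast on. Row 2's repeat uses four stitches, and the 13 left after the two edge stitches do not divide evenly by four, which is the kind of mismatch the test knitters had to improvise around.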

Of course, that is what makes neural-network-inspired creativity so beguiling. The computers don’t understand the limitations of our fields, so they often create or ask the impossible. And in so doing, they might just reveal some new way of making or thinking, acting as a bridge into the future of these art forms.

“I like to imagine that some of the techniques and stitch patterns used today [were] invented via a similar process of trying to decipher instructions written by knitters long since passed, on the back of an envelope, in faded ink, assuming all sorts of cultural knowledge that may or may not be available,” the user GloriaHanlon concluded.

The creations of SkyKnit are fully cyborg artifacts, mixing human whimsy and intelligence with machine processing and ignorance. And the misapprehensions are, to a large extent, the point.